A High Level Overview

What is Apache Airflow?

Airflow Principles

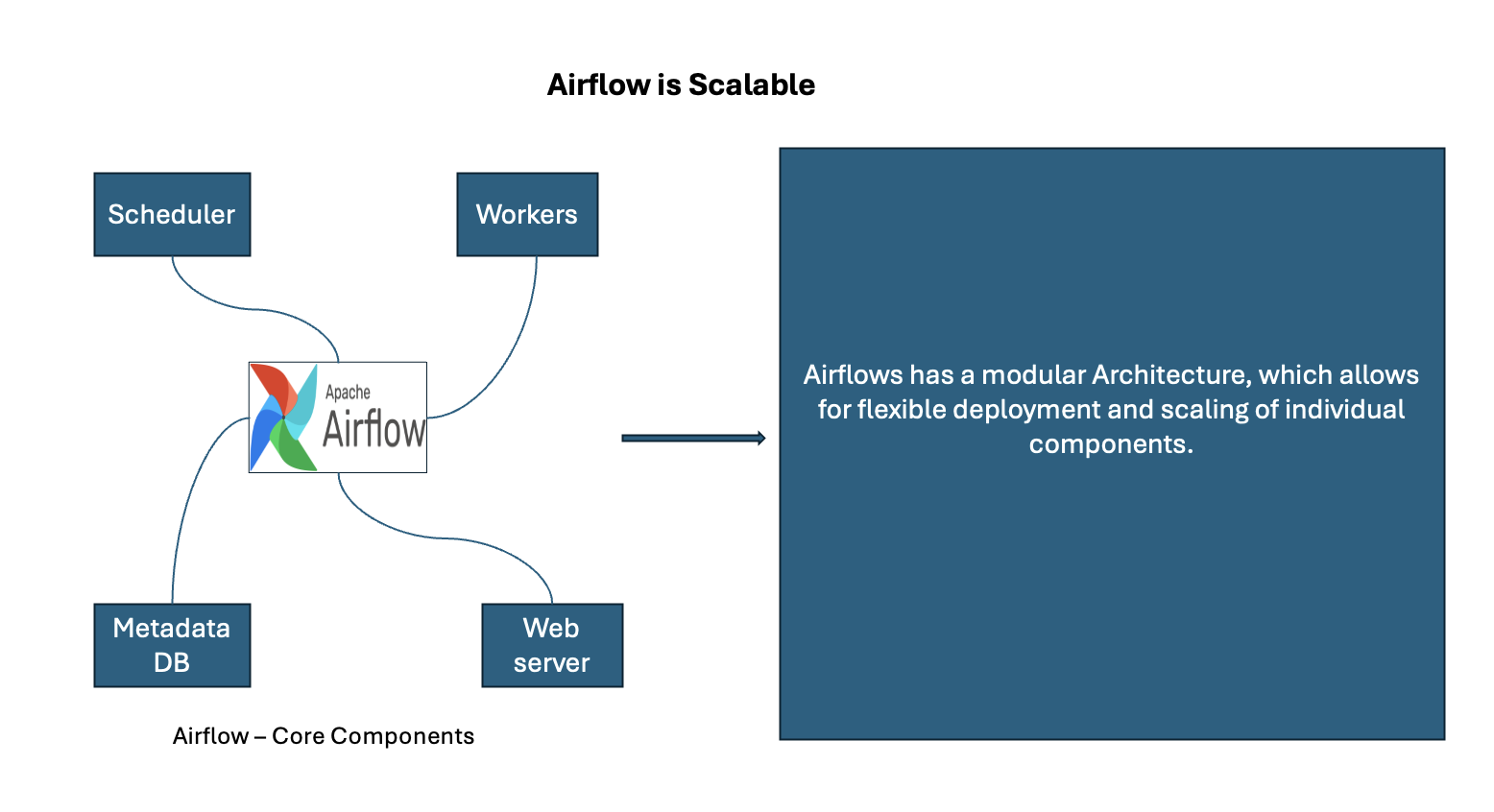

Scalable

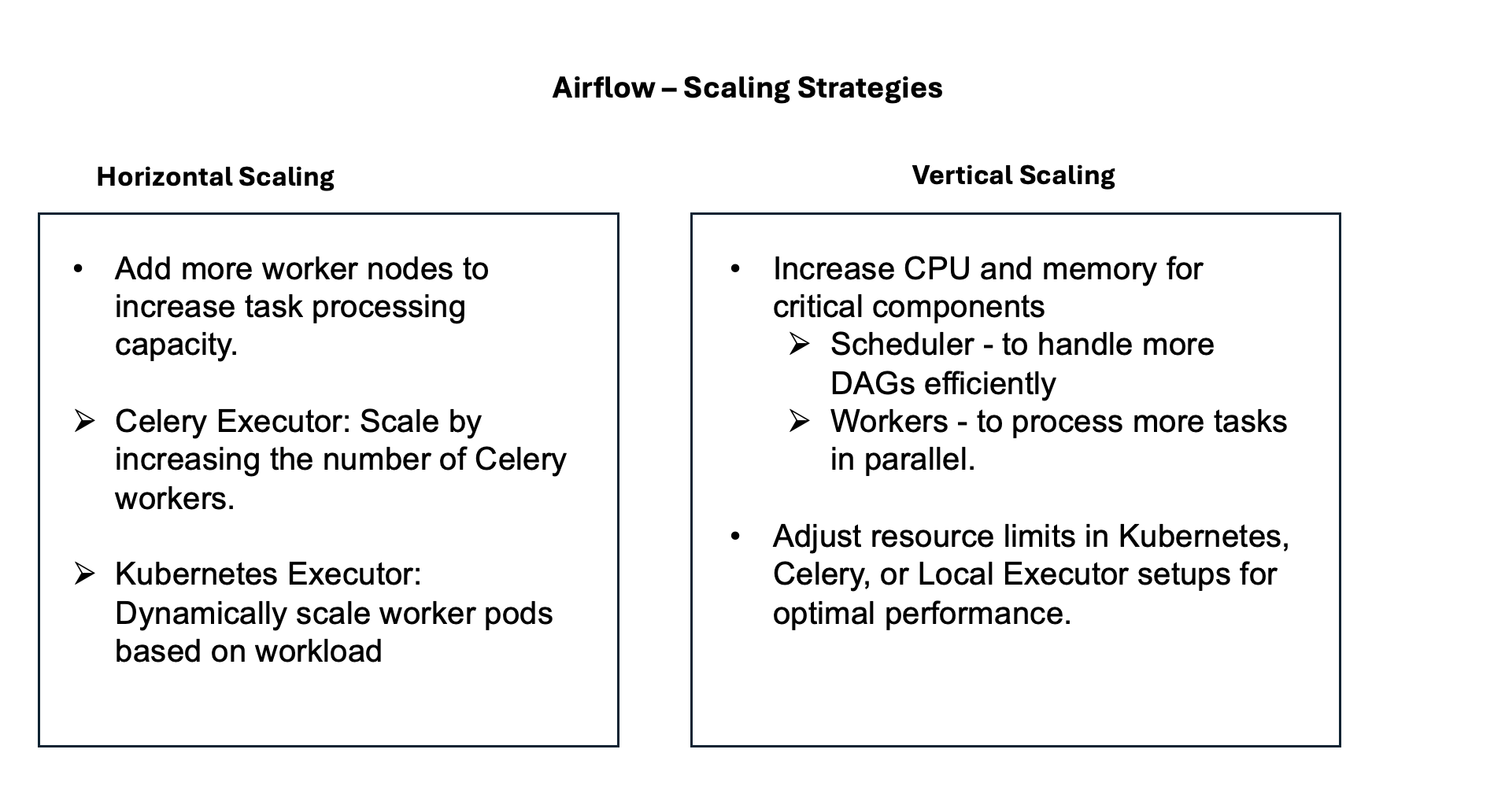

Scaling Strategies

Dynamic

Extensible

Elegant

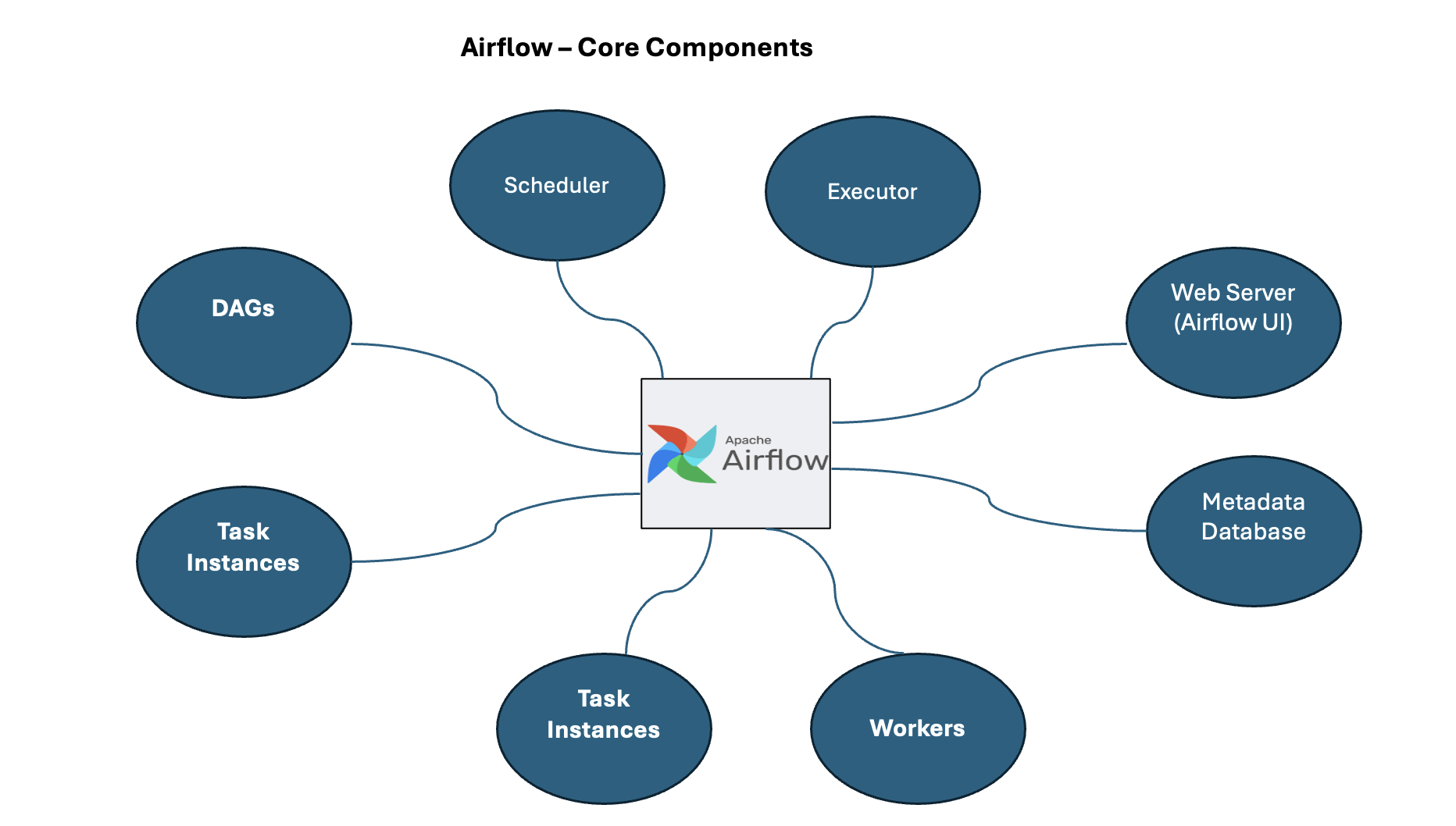

Airflow - Core Components

Airflow Features

Airflow Integrations

Airflow Providers

Airflow Docker stack

Airflow is a platform to programmatically author, schedule and monitor workflows.

Developed initially by Airbnb in 2014 and later donated to the Apache Software Foundation, Airflow has become the de facto standard for workflow orchestration in the data engineering ecosystem.

Airflow allows you to create:

custom operators

custom sensors

hooks

plugins

These let you extend Airflow functionality, integrate with any system, define new abstractions, and tailor workflows to your environment. 🚀

Apache Airflow provides the following features:
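As a rough illustration of the custom-operator idea: in Airflow, an operator is a class that subclasses `BaseOperator` and overrides `execute(context)`. The sketch below mirrors that shape with a stand-in base class so that it runs without Airflow installed. `BaseOperatorStandIn` and `GreetOperator` are hypothetical names for this example, not part of Airflow. In a real deployment you would subclass Airflow's own `BaseOperator` instead.

```python
class BaseOperatorStandIn:
    """Stand-in for Airflow's BaseOperator (assumption: used only so the
    sketch runs without Airflow installed)."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id

    def execute(self, context: dict):
        # Subclasses put their task logic here, as Airflow operators do.
        raise NotImplementedError


class GreetOperator(BaseOperatorStandIn):
    """Hypothetical custom operator that builds a greeting."""

    def __init__(self, task_id: str, name: str) -> None:
        super().__init__(task_id=task_id)
        self.name = name

    def execute(self, context: dict) -> str:
        # "ds" is the run date string (YYYY-MM-DD) in Airflow's task context;
        # here it is passed in by hand.
        return f"Hello, {self.name}! (run date: {context['ds']})"


op = GreetOperator(task_id="greet", name="Airflow")
message = op.execute({"ds": "2024-01-01"})
print(message)
```

Sensors and hooks follow the same pattern of subclassing a base class and overriding a small number of methods.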

Pure Python

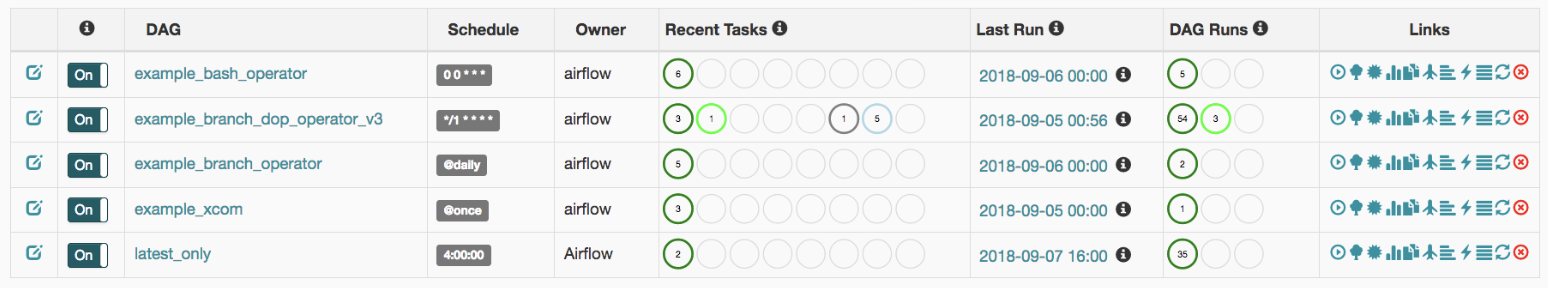

Useful UI

Robust Integrations

Easy to Use

Open Source

No more command-line or XML black-magic! Everything is Python:

create workflows

extend Airflow

use Python libraries

scheduler, executor and workers run Python
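To make the "workflows are Python" idea concrete, here is a minimal sketch of what a workflow boils down to: tasks plus the dependencies between them, run in dependency order. It uses only the standard library (`graphlib`, Python 3.9+) and is not Airflow's API. The task names and the `run_workflow` helper are assumptions made for illustration. In Airflow itself, the scheduler and executor handle this ordering for you.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def extract():
    return [1, 2, 3]


def transform(rows):
    return [r * 10 for r in rows]


def load(rows):
    return sum(rows)


# Each task maps to (callable, list of upstream task names).
tasks = {
    "extract": (extract, []),
    "transform": (transform, ["extract"]),
    "load": (load, ["transform"]),
}


def run_workflow(tasks):
    """Run every task after its upstream tasks, passing their results along."""
    graph = {name: set(upstream) for name, (_, upstream) in tasks.items()}
    order = list(TopologicalSorter(graph).static_order())
    results = {}
    for name in order:
        func, upstream = tasks[name]
        results[name] = func(*(results[u] for u in upstream))
    return order, results


order, results = run_workflow(tasks)
print(order, results["load"])
```

Because the workflow is ordinary Python data and functions, it can be generated in loops, kept in version control, and unit tested like any other code.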

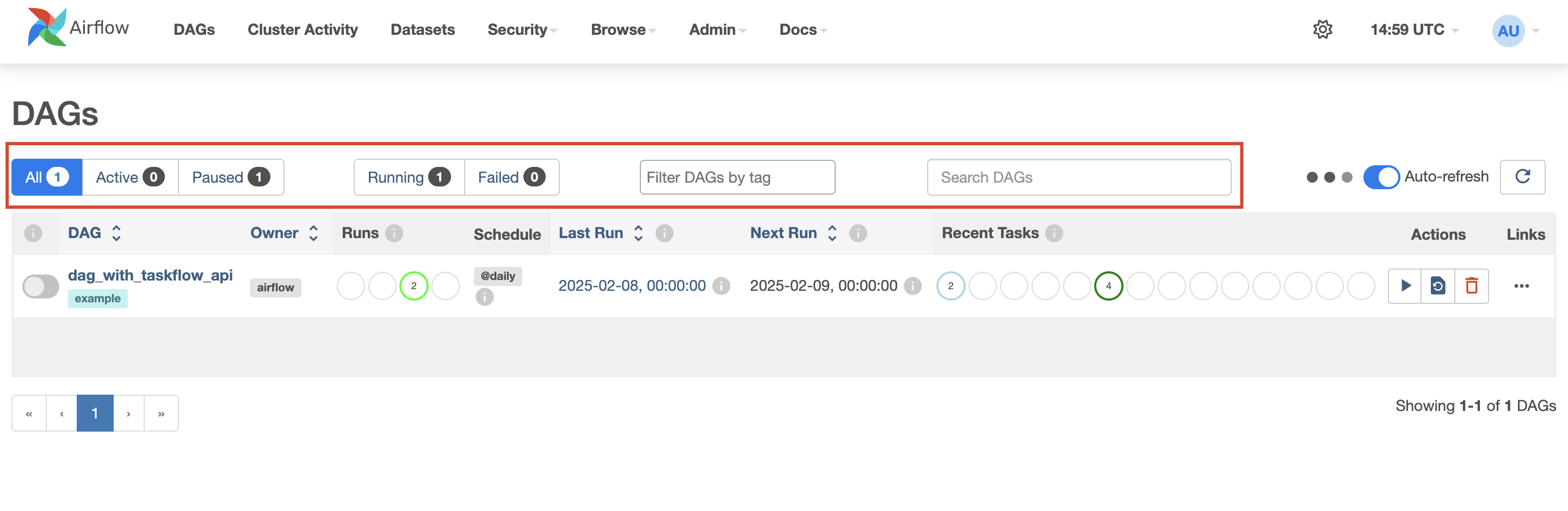

all dags

active dags

paused dags

running dags

filter by tag

filter by name

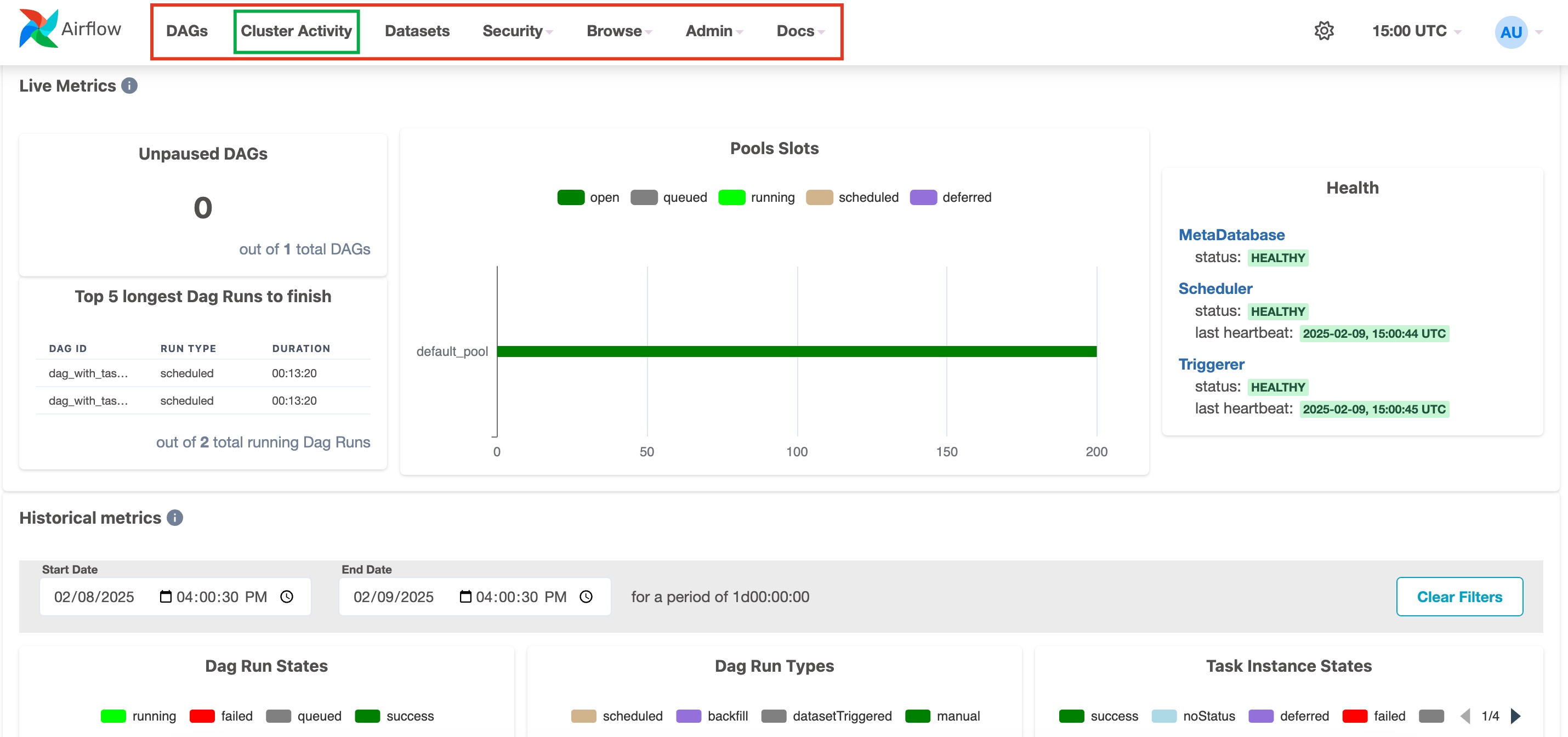

Worker Status

Task Distribution

Queue Health

Executor Performance

Debugging

Airflow offers robust integrations with:

Cloud Platforms

Databases

Big Data Frameworks

Anyone with Python knowledge can deploy a workflow. Apache Airflow does not limit the scope of your pipelines; you can use it to build ML models, transfer data, manage your infrastructure, and more.

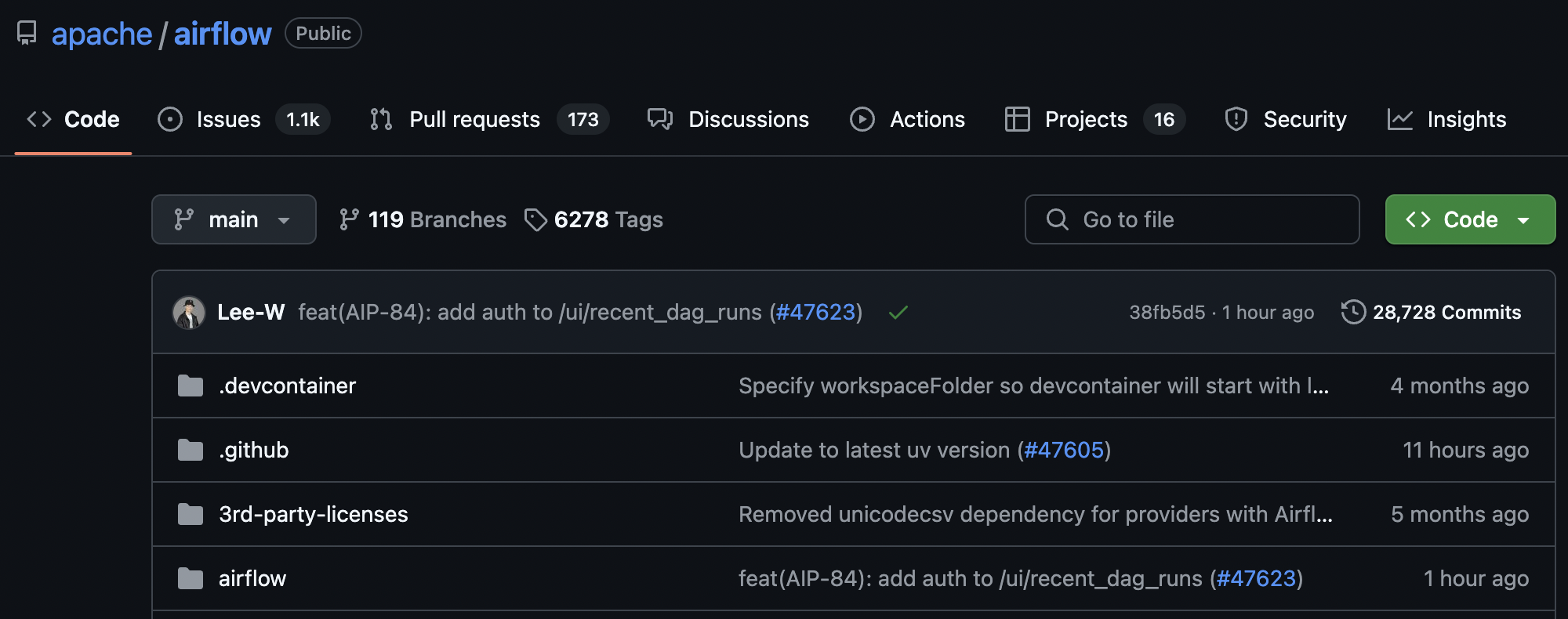

Last commit: 1 hour ago

Total commits: +28k

Whenever you want to share an improvement, you can do so by opening a PR. It’s as simple as that: no barriers, no prolonged procedures. Airflow has many active users who willingly share their experiences. Have any questions? Check out our buzzing Slack.

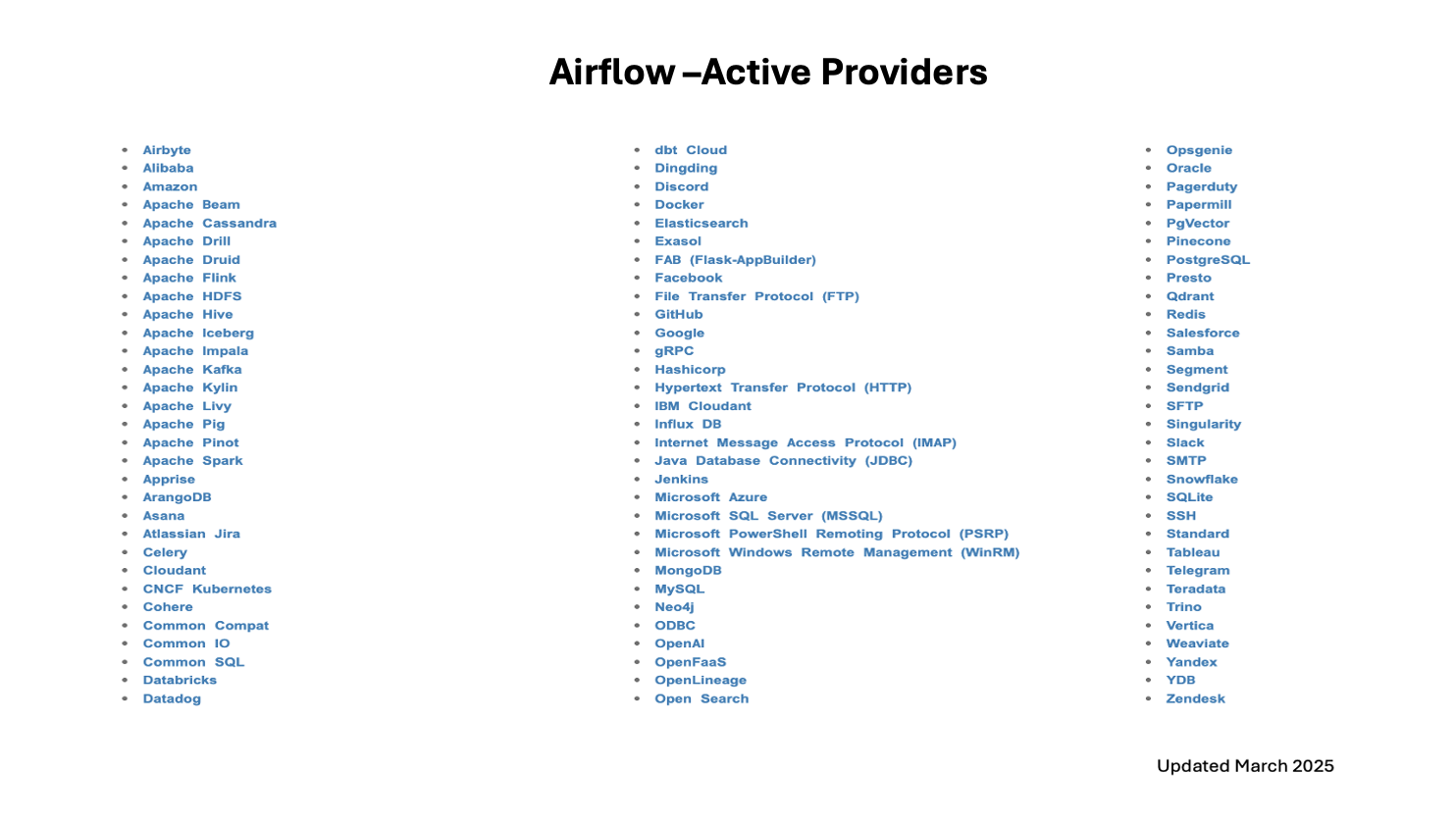

Airflow has 80+ provider packages, including integrations with third-party services. They are updated independently of the Apache Airflow core. The current integrations are shown below.

Airflow has an official Dockerfile and Docker image published on Docker Hub as a convenience package for installation. You can extend and customize the image according to your requirements and use it in your own deployments.

Refer to the official Apache Airflow documentation here:

Airflow Documentation: https://airflow.apache.org/docs/

Airflow Use Cases: https://airflow.apache.org/use-cases/